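Basic idea: these are my notes on the Android stream-pushing code from a recent industrial inspection project. The client wanted the detection results produced on the device to be pushed to a server, so that the device's compute is used to the fullest and the server acts only as a relay.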

I. First get the original author's code, GitHub - nihui/ncnn-android-nanodet, running. Importing nihui's ncnn library and opencv-mobile library is all that is needed.

II. Build an Android cross-compilation environment on Linux. See my earlier post: "1. Porting third-party C++ .so and .a packages to Android" (sxj731533730, CSDN blog).

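III. Use the cross-compilation environment to build the Android version of FFmpeg as static or shared libraries. See: "8. Video capture and stream pushing to a server on Linux/Android (FFMPEG4.4 & Android7.0)" (sxj731533730, CSDN blog).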

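(1) The x264 code from the official site seems to have problems, so here is the x264 code I used:

git clone https://github.com/sxj731533730/x264.git

Cross-compile x264 on Linux with the following script: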

#!/bin/bash

export NDK=/usr/local/android-ndk-r21e

TOOLCHAIN=$NDK/toolchains/llvm/prebuilt/linux-x86_64

export API=21

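function build_android
{
    # The configure options must be on consecutive lines: a blank line after
    # a trailing backslash ends the command, and the last option has no backslash.
    ./configure \
        --prefix=$PREFIX \
        --disable-cli \
        --disable-asm \
        --enable-static \
        --enable-pic \
        --host=$my_host \
        --cross-prefix=$CROSS_PREFIX \
        --sysroot=$NDK/toolchains/llvm/prebuilt/linux-x86_64/sysroot

    make clean
    make -j8
    make install
}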

#x86

PREFIX=./android/x86

my_host=i686-linux-android

export TARGET=i686-linux-android

export CC=$TOOLCHAIN/bin/$TARGET$API-clang

export CXX=$TOOLCHAIN/bin/$TARGET$API-clang++

CROSS_PREFIX=$TOOLCHAIN/bin/i686-linux-android-

build_android

#armeabi-v7a

PREFIX=./android/armeabi-v7a

my_host=armv7a-linux-android

export TARGET=armv7a-linux-androideabi

export CC=$TOOLCHAIN/bin/$TARGET$API-clang

export CXX=$TOOLCHAIN/bin/$TARGET$API-clang++

CROSS_PREFIX=$TOOLCHAIN/bin/arm-linux-androideabi-

build_android

#arm64-v8a

PREFIX=./android/arm64-v8a

my_host=aarch64-linux-android

export TARGET=aarch64-linux-android

export CC=$TOOLCHAIN/bin/$TARGET$API-clang

export CXX=$TOOLCHAIN/bin/$TARGET$API-clang++

CROSS_PREFIX=$TOOLCHAIN/bin/aarch64-linux-android-

build_android

#x86_64

PREFIX=./android/x86_64

my_host=x86_64-linux-android

export TARGET=x86_64-linux-android

export CC=$TOOLCHAIN/bin/$TARGET$API-clang

export CXX=$TOOLCHAIN/bin/$TARGET$API-clang++

CROSS_PREFIX=$TOOLCHAIN/bin/x86_64-linux-android-

build_android

(2) FFmpeg code

git clone https://github.com/FFmpeg/FFmpeg.git

FFmpeg/libavutil/x86/asm.h needs a small change:

//#define HAVE_7REGS (ARCH_X86_64 || (HAVE_EBX_AVAILABLE && HAVE_EBP_AVAILABLE)) // comment out this line

#define HAVE_7REGS (ARCH_X86_64 ) // and use this definition instead

Build script:

#!/bin/bash

export NDK=/usr/local/android-ndk-r21e

TOOLCHAIN=$NDK/toolchains/llvm/prebuilt/linux-x86_64

function build_android
{
    # The configure options must be on consecutive lines: a blank line after
    # a trailing backslash ends the command.
    ./configure \
        --prefix=$PREFIX \
        --enable-neon \
        --enable-hwaccels \
        --enable-gpl \
        --disable-postproc \
        --disable-static \
        --enable-shared \
        --disable-debug \
        --enable-small \
        --enable-jni \
        --enable-mediacodec \
        --disable-doc \
        --enable-ffmpeg \
        --disable-ffplay \
        --disable-ffprobe \
        --disable-avdevice \
        --disable-symver \
        --enable-libx264 \
        --enable-encoder=libx264 \
        --enable-nonfree \
        --enable-muxers \
        --enable-decoders \
        --enable-demuxers \
        --enable-parsers \
        --enable-protocols \
        --cross-prefix=$CROSS_PREFIX \
        --target-os=android \
        --arch=$ARCH \
        --cpu=$CPU \
        --cc=$CC \
        --cxx=$CXX \
        --enable-cross-compile \
        --sysroot=$SYSROOT \
        --extra-cflags="-I$X264_INCLUDE -Os -fpic $OPTIMIZE_CFLAGS" \
        --extra-ldflags="-lm -L$X264_LIB $ADDI_LDFLAGS"

    make clean
    make -j8
    make install
    echo "The Compilation of FFmpeg with x264 $CPU is completed"
}

#armv8-a

ARCH=arm64

CPU=armv8-a

API=21

CC=$TOOLCHAIN/bin/aarch64-linux-android$API-clang

CXX=$TOOLCHAIN/bin/aarch64-linux-android$API-clang++

SYSROOT=$NDK/toolchains/llvm/prebuilt/linux-x86_64/sysroot

CROSS_PREFIX=$TOOLCHAIN/bin/aarch64-linux-android-

PREFIX=$(pwd)/android/$CPU

# -mfloat-abi, -mfpu and -marm are 32-bit ARM options and do not apply to arm64
OPTIMIZE_CFLAGS="-march=$CPU"

BASE_PATH=/home/ubuntu

LIB_TARGET_ABI=arm64-v8a

X264_INCLUDE=$BASE_PATH/x264/android/$LIB_TARGET_ABI/include

X264_LIB=$BASE_PATH/x264/android/$LIB_TARGET_ABI/lib

build_android

cp $X264_LIB/libx264.a $PREFIX/lib

#armv7-a

ARCH=arm

CPU=armv7-a

API=21

CC=$TOOLCHAIN/bin/armv7a-linux-androideabi$API-clang

CXX=$TOOLCHAIN/bin/armv7a-linux-androideabi$API-clang++

SYSROOT=$NDK/toolchains/llvm/prebuilt/linux-x86_64/sysroot

CROSS_PREFIX=$TOOLCHAIN/bin/arm-linux-androideabi-

PREFIX=$(pwd)/android/$CPU

OPTIMIZE_CFLAGS="-mfloat-abi=softfp -mfpu=vfp -marm -march=$CPU "

BASE_PATH=/home/ubuntu

LIB_TARGET_ABI=armeabi-v7a

X264_INCLUDE=$BASE_PATH/x264/android/$LIB_TARGET_ABI/include

X264_LIB=$BASE_PATH/x264/android/$LIB_TARGET_ABI/lib

build_android

cp $X264_LIB/libx264.a $PREFIX/lib

#x86

ARCH=x86

CPU=x86

API=21

CC=$TOOLCHAIN/bin/i686-linux-android$API-clang

CXX=$TOOLCHAIN/bin/i686-linux-android$API-clang++

SYSROOT=$NDK/toolchains/llvm/prebuilt/linux-x86_64/sysroot

CROSS_PREFIX=$TOOLCHAIN/bin/i686-linux-android-

PREFIX=$(pwd)/android/$CPU

OPTIMIZE_CFLAGS="-march=i686 -mtune=intel -mssse3 -mfpmath=sse -m32"

BASE_PATH=/home/ubuntu

LIB_TARGET_ABI=x86

X264_INCLUDE=$BASE_PATH/x264/android/$LIB_TARGET_ABI/include

X264_LIB=$BASE_PATH/x264/android/$LIB_TARGET_ABI/lib

build_android

cp $X264_LIB/libx264.a $PREFIX/lib

#x86_64

ARCH=x86_64

CPU=x86-64

API=21

CC=$TOOLCHAIN/bin/x86_64-linux-android$API-clang

CXX=$TOOLCHAIN/bin/x86_64-linux-android$API-clang++

SYSROOT=$NDK/toolchains/llvm/prebuilt/linux-x86_64/sysroot

CROSS_PREFIX=$TOOLCHAIN/bin/x86_64-linux-android-

PREFIX=$(pwd)/android/$CPU

OPTIMIZE_CFLAGS="-march=$CPU -mtune=intel -msse4.2 -mpopcnt -m64"

BASE_PATH=/home/ubuntu

LIB_TARGET_ABI=x86_64

X264_INCLUDE=$BASE_PATH/x264/android/$LIB_TARGET_ABI/include

X264_LIB=$BASE_PATH/x264/android/$LIB_TARGET_ABI/lib

build_android

cp $X264_LIB/libx264.a $PREFIX/lib

This generates the library files:

ubuntu@ubuntu:~/FFmpeg/android$ tree -L 3
.
├── armv7-a
│   ├── bin
│   │   └── ffmpeg
│   ├── include
│   │   ├── libavcodec
│   │   ├── libavfilter
│   │   ├── libavformat
│   │   ├── libavutil
│   │   ├── libswresample
│   │   └── libswscale
│   ├── lib
│   │   ├── libavcodec.so
│   │   ├── libavfilter.so
│   │   ├── libavformat.so
│   │   ├── libavutil.so
│   │   ├── libswresample.so
│   │   ├── libswscale.so
│   │   ├── libx264.a
│   │   └── pkgconfig
│   └── share
│       └── ffmpeg
├── armv8-a
│   ├── bin
│   │   └── ffmpeg
│   ├── include
│   │   ├── libavcodec
│   │   ├── libavfilter
│   │   ├── libavformat
│   │   ├── libavutil
│   │   ├── libswresample
│   │   └── libswscale
│   ├── lib
│   │   ├── libavcodec.so
│   │   ├── libavfilter.so
│   │   ├── libavformat.so
│   │   ├── libavutil.so
│   │   ├── libswresample.so
│   │   ├── libswscale.so
│   │   ├── libx264.a
│   │   └── pkgconfig
│   └── share
│       └── ffmpeg
├── x86
│   ├── bin
│   │   └── ffmpeg
│   ├── include
│   │   ├── libavcodec
│   │   ├── libavfilter
│   │   ├── libavformat
│   │   ├── libavutil
│   │   ├── libswresample
│   │   └── libswscale
│   ├── lib
│   │   ├── libavcodec.so
│   │   ├── libavfilter.so
│   │   ├── libavformat.so
│   │   ├── libavutil.so
│   │   ├── libswresample.so
│   │   ├── libswscale.so
│   │   ├── libx264.a
│   │   └── pkgconfig
│   └── share
│       └── ffmpeg
└── x86-64
    ├── bin
    │   └── ffmpeg
    ├── include
    │   ├── libavcodec
    │   ├── libavfilter
    │   ├── libavformat
    │   ├── libavutil
    │   ├── libswresample
    │   └── libswscale
    ├── lib
    │   ├── libavcodec.so
    │   ├── libavfilter.so
    │   ├── libavformat.so
    │   ├── libavutil.so
    │   ├── libswresample.so
    │   ├── libswscale.so
    │   ├── libx264.a
    │   └── pkgconfig
    └── share
        └── ffmpeg

52 directories, 32 files

(3) Rename the output folders that the FFmpeg build generated.

Original names: armv8-a armv7-a x86 x86-64

New names: arm64-v8a armeabi-v7a x86 x86_64

I keep all of these libraries under one top-level folder, ffmpeg-mobile-20220107-android.
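The renaming above is a fixed mapping from the `$CPU` value used by the build script to the ABI folder name that Android expects. As a minimal sketch (the function name `abi_for_cpu_dir` is hypothetical and not part of the project), the mapping can be written down like this:

```cpp
#include <map>
#include <string>

// Hypothetical helper: maps the per-CPU output folder produced by the
// FFmpeg build script to the Android ABI folder name.
// Returns an empty string for a folder name it does not know.
std::string abi_for_cpu_dir(const std::string& cpu_dir) {
    static const std::map<std::string, std::string> table = {
        {"armv8-a", "arm64-v8a"},
        {"armv7-a", "armeabi-v7a"},
        {"x86", "x86"},
        {"x86-64", "x86_64"},
    };
    const auto it = table.find(cpu_dir);
    return it == table.end() ? std::string() : it->second;
}
```

The new names matter because `${ANDROID_ABI}` in CMakeLists.txt expands to exactly these strings, so a folder that keeps its old name would not be found for that ABI.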

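(4) Then add our self-built FFmpeg libraries on top of the ncnn-android-nanodet code.

Create a jniLibs/libs/ directory under src (src/main/jniLibs/libs) and copy the shared libraries for each ABI into it, as in the screenshot in the original post.

Add the following to build.gradle: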

sourceSets{

main{

jniLibs.srcDirs=["src/main/jniLibs/libs"]

}

}

Then import the libraries in CMakeLists.txt:

cmake_minimum_required(VERSION 3.10)

project(nanodetncnn)

set(OpenCV_DIR ${CMAKE_SOURCE_DIR}/opencv-mobile-4.5.4-android/sdk/native/jni)

find_package(OpenCV REQUIRED core imgproc)

set(ncnn_DIR ${CMAKE_SOURCE_DIR}/ncnn-20211208-android-vulkan/${ANDROID_ABI}/lib/cmake/ncnn)

find_package(ncnn REQUIRED)

include_directories(${CMAKE_SOURCE_DIR}/ffmpeg-mobile-20220107-android/${ANDROID_ABI}/include)

add_library(libavformat SHARED IMPORTED)

set_target_properties(libavformat PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/../jniLibs/libs/${ANDROID_ABI}/libavformat.so)

add_library(libavcodec SHARED IMPORTED)

set_target_properties(libavcodec PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/../jniLibs/libs/${ANDROID_ABI}/libavcodec.so)

add_library(libavfilter SHARED IMPORTED)

set_target_properties(libavfilter PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/../jniLibs/libs/${ANDROID_ABI}/libavfilter.so)

add_library(libavutil SHARED IMPORTED)

set_target_properties(libavutil PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/../jniLibs/libs/${ANDROID_ABI}/libavutil.so)

add_library(libswresample SHARED IMPORTED)

set_target_properties(libswresample PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/../jniLibs/libs/${ANDROID_ABI}/libswresample.so)

add_library(libswscale SHARED IMPORTED)

set_target_properties(libswscale PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/../jniLibs/libs/${ANDROID_ABI}/libswscale.so)

add_library(X264 STATIC IMPORTED)

set_target_properties(X264 PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/../jniLibs/libs/${ANDROID_ABI}/libx264.a)

add_library(nanodetncnn SHARED nanodetncnn.cpp nanodet.cpp ndkcamera.cpp)

find_library( # Sets the name of the path variable.

log-lib

# Specifies the name of the NDK library that

# you want CMake to locate.

log )

target_link_libraries(nanodetncnn

ncnn

${OpenCV_LIBS}

camera2ndk

mediandk

libavformat

libavcodec

libavfilter

libavutil

libswresample

libswscale

X264

${log-lib}

)

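Because I copied the whole FFmpeg build over as is, the header files sit in a separate folder (ffmpeg-mobile-20220107-android); import them from whatever location suits your project.

[Screenshot: my directory structure]

First test whether the original functionality is affected. If it is not, move on to the next step: writing the stream-pushing code on the PC side.

IV. Set up an Nginx server on Windows 10. Reference documentation: http://nginx-win.ecsds.eu/download/documentation-pdf/nginx%20for%20Windows%20-%20documentation%201.8.pdf

(1) Download the archive http://nginx-win.ecsds.eu/download/nginx%201.7.11.3%20Gryphon.zip, rename the folder to nginx_1.7.11.3_Gryphon, and extract it to drive D.

(2) Then enter the extracted directory: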

G:\ffmpeg_demo>d:

D:\>cd nginx_1.7.11.3_Gryphon

D:\nginx_1.7.11.3_Gryphon>git clone https://github.com/arut/nginx-rtmp-module

Cloning into 'nginx-rtmp-module'...

remote: Enumerating objects: 4324, done.

remote: Counting objects: 100% (7/7), done.

remote: Compressing objects: 100% (7/7), done.

remote: Total 4324 (delta 1), reused 3 (delta 0), pack-reused 4317

Receiving objects: 100% (4324/4324), 3.11 MiB | 2.38 MiB/s, done.

Resolving deltas: 100% (2689/2689), done.

(3) Create the configuration file nginx-win-rtmp.conf in the directory nginx_1.7.11.3_Gryphon\conf:

#user nobody;

# multiple workers works !

worker_processes 2;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 8192;

# max value 32768, nginx recycling connections+registry optimization =

# this.value * 20 = max concurrent connections currently tested with one worker

# C1000K should be possible depending there is enough ram/cpu power

# multi_accept on;

}

rtmp {

server {

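listen 1935; # listening port; change it if it is already in use

chunk_size 4000; # chunk size for the uploaded FLV data

application live { # create an application named live

live on; # enable live streaming for this application

allow publish 127.0.0.1;#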

allow play all;

}

}

}

http {

#include /nginx/conf/naxsi_core.rules;

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr:$remote_port - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

# # loadbalancing PHP

# upstream myLoadBalancer {

# server 127.0.0.1:9001 weight=1 fail_timeout=5;

# server 127.0.0.1:9002 weight=1 fail_timeout=5;

# server 127.0.0.1:9003 weight=1 fail_timeout=5;

# server 127.0.0.1:9004 weight=1 fail_timeout=5;

# server 127.0.0.1:9005 weight=1 fail_timeout=5;

# server 127.0.0.1:9006 weight=1 fail_timeout=5;

# server 127.0.0.1:9007 weight=1 fail_timeout=5;

# server 127.0.0.1:9008 weight=1 fail_timeout=5;

# server 127.0.0.1:9009 weight=1 fail_timeout=5;

# server 127.0.0.1:9010 weight=1 fail_timeout=5;

# least_conn;

# }

sendfile off;

#tcp_nopush on;

server_names_hash_bucket_size 128;

## Start: Timeouts ##

client_body_timeout 10;

client_header_timeout 10;

keepalive_timeout 30;

send_timeout 10;

keepalive_requests 10;

## End: Timeouts ##

#gzip on;

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

## Caching Static Files, put before first location

#location ~* .(jpg|jpeg|png|gif|ico|css|js)$ {

# expires 14d;

# add_header Vary Accept-Encoding;

#}

# For Naxsi remove the single # line for learn mode, or the ## lines for full WAF mode

location / {

#include /nginx/conf/mysite.rules; # see also http block naxsi include line

##SecRulesEnabled;

##DeniedUrl "/RequestDenied";

##CheckRule "$SQL >= 8" BLOCK;

##CheckRule "$RFI >= 8" BLOCK;

##CheckRule "$TRAVERSAL >= 4" BLOCK;

##CheckRule "$XSS >= 8" BLOCK;

root html;

index index.html index.htm;

}

# For Naxsi remove the ## lines for full WAF mode, redirect location block used by naxsi

##location /RequestDenied {

## return 412;

##}

## Lua examples !

# location /robots.txt {

# rewrite_by_lua '

# if ngx.var.http_host ~= "localhost" then

# return ngx.exec("/robots_disallow.txt");

# end

# ';

# }

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ .php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ .php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000; # single backend process

# fastcgi_pass myLoadBalancer; # or multiple, see example above

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /.ht {

# deny all;

#}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

# HTTPS server

#

#server {

# listen 443 ssl spdy;

# server_name localhost;

# ssl on;

# ssl_certificate cert.pem;

# ssl_certificate_key cert.key;

# ssl_session_timeout 5m;

# ssl_prefer_server_ciphers On;

# ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

# ssl_ciphers ECDH+AESGCM:ECDH+AES256:ECDH+AES128:ECDH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!eNULL:!MD5:!DSS:!EXP:!ADH:!LOW:!MEDIUM;

# location / {

# root html;

# index index.html index.htm;

# }

#}

}(4)、然后执行,开启流媒体服务

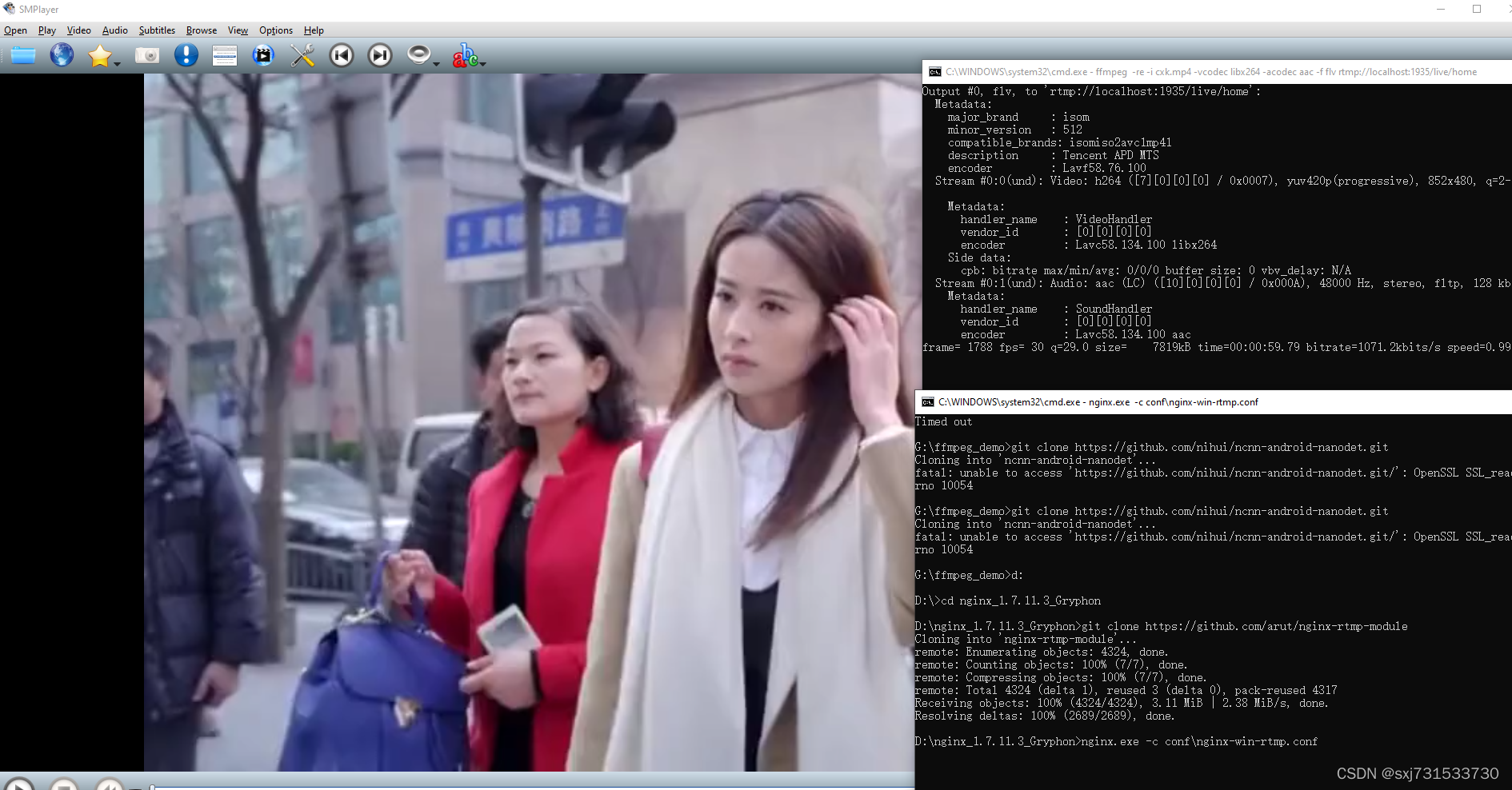

D:\nginx_1.7.11.3_Gryphon>nginx.exe -c conf\nginx-win-rtmp.conf(5)、使用ffmpeg推一个视频,使用smplayer播放器拉一下

G:\>ffmpeg -re -i cxk.mp4 -vcodec libx264 -acodec aac -f flv rtmp://localhost:1935/live/home

ffmpeg version 4.4-full_build-www.gyan.dev Copyright (c) 2000-2021 the FFmpeg developers

built with gcc 10.2.0 (Rev6, Built by MSYS2 project)

configuration: --enable-gpl --enable-version3 --enable-shared --disable-w32threads --disable-autodetect --enable-fontconfig --enable-iconv --enable-gnutls --enable-libxml2 --enable-gmp --enable-lzma --enable-libsnappy --enable-zlib --enable-librist --enable-libsrt --enable-libssh --enable-libzmq --enable-avisynth --enable-libbluray --enable-libcaca --enable-sdl2 --enable-libdav1d --enable-libzvbi --enable-librav1e --enable-libsvtav1 --enable-libwebp --enable-libx264 --enable-libx265 --enable-libxvid --enable-libaom --enable-libopenjpeg --enable-libvpx --enable-libass --enable-frei0r --enable-libfreetype --enable-libfribidi --enable-libvidstab --enable-libvmaf --enable-libzimg --enable-amf --enable-cuda-llvm --enable-cuvid --enable-ffnvcodec --enable-nvdec --enable-nvenc --enable-d3d11va --enable-dxva2 --enable-libmfx --enable-libglslang --enable-vulkan --enable-opencl --enable-libcdio --enable-libgme --enable-libmodplug --enable-libopenmpt --enable-libopencore-amrwb --enable-libmp3lame --enable-libshine --enable-libtheora --enable-libtwolame --enable-libvo-amrwbenc --enable-libilbc --enable-libgsm --enable-libopencore-amrnb --enable-libopus --enable-libspeex --enable-libvorbis --enable-ladspa --enable-libbs2b --enable-libflite --enable-libmysofa --enable-librubberband --enable-libsoxr --enable-chromaprint

libavutil 56. 70.100 / 56. 70.100

libavcodec 58.134.100 / 58.134.100

libavformat 58. 76.100 / 58. 76.100

libavdevice 58. 13.100 / 58. 13.100

libavfilter 7.110.100 / 7.110.100

libswscale 5. 9.100 / 5. 9.100

libswresample 3. 9.100 / 3. 9.100

libpostproc 55. 9.100 / 55. 9.100

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from 'cxk.mp4':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2avc1mp41

encoder : Lavf56.40.101

description : Tencent APD MTS

Duration: 00:01:02.98, start: 0.000000, bitrate: 1015 kb/s

Stream #0:0(und): Video: h264 (High) (avc1 / 0x31637661), yuv420p, 852x480, 817 kb/s, 30 fps, 30 tbr, 15360 tbn, 60 tbc (default)

Metadata:

handler_name : VideoHandler

vendor_id : [0][0][0][0]

Stream #0:1(und): Audio: aac (LC) (mp4a / 0x6134706D), 48000 Hz, stereo, fltp, 192 kb/s (default)

Metadata:

handler_name : SoundHandler

vendor_id : [0][0][0][0]

Stream mapping:

Stream #0:0 -> #0:0 (h264 (native) -> h264 (libx264))

Stream #0:1 -> #0:1 (aac (native) -> aac (native))

Press [q] to stop, [?] for help

[libx264 @ 0000026d74fc25c0] using cpu capabilities: MMX2 SSE2Fast SSSE3 SSE4.2 AVX FMA3 BMI2 AVX2

[libx264 @ 0000026d74fc25c0] profile High, level 3.1, 4:2:0, 8-bit

[libx264 @ 0000026d74fc25c0] 264 - core 161 r3048 b86ae3c - H.264/MPEG-4 AVC codec - Copyleft 2003-2021 - http://www.videolan.org/x264.html - options: cabac=1 ref=3 deblock=1:0:0 analyse=0x3:0x113 me=hex subme=7 psy=1 psy_rd=1.00:0.00 mixed_ref=1 me_range=16 chroma_me=1 trellis=1 8x8dct=1 cqm=0 deadzone=21,11 fast_pskip=1 chroma_qp_offset=-2 threads=12 lookahead_threads=2 sliced_threads=0 nr=0 decimate=1 interlaced=0 bluray_compat=0 constrained_intra=0 bframes=3 b_pyramid=2 b_adapt=1 b_bias=0 direct=1 weightb=1 open_gop=0 weightp=2 keyint=250 keyint_min=25 scenecut=40 intra_refresh=0 rc_lookahead=40 rc=crf mbtree=1 crf=23.0 qcomp=0.60 qpmin=0 qpmax=69 qpstep=4 ip_ratio=1.40 aq=1:1.00

Output #0, flv, to 'rtmp://localhost:1935/live/home':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2avc1mp41

description : Tencent APD MTS

encoder : Lavf58.76.100

Stream #0:0(und): Video: h264 ([7][0][0][0] / 0x0007), yuv420p(progressive), 852x480, q=2-31, 30 fps, 1k tbn (default)

Metadata:

handler_name : VideoHandler

vendor_id : [0][0][0][0]

encoder : Lavc58.134.100 libx264

Side data:

cpb: bitrate max/min/avg: 0/0/0 buffer size: 0 vbv_delay: N/A

Stream #0:1(und): Audio: aac (LC) ([10][0][0][0] / 0x000A), 48000 Hz, stereo, fltp, 128 kb/s (default)

Metadata:

handler_name : SoundHandler

vendor_id : [0][0][0][0]

encoder : Lavc58.134.100 aac

[flv @ 0000026d74f7bc40] Failed to update header with correct duration.

[flv @ 0000026d74f7bc40] Failed to update header with correct filesize.

frame= 1319 fps= 30 q=-1.0 Lsize= 5876kB time=00:00:44.18 bitrate=1089.6kbits/s speed=0.997x

video:5122kB audio:694kB subtitle:0kB other streams:0kB global headers:0kB muxing overhead: 1.043856%

[libx264 @ 0000026d74fc25c0] frame I:7 Avg QP:21.82 size: 34098

[libx264 @ 0000026d74fc25c0] frame P:409 Avg QP:23.84 size: 8567

[libx264 @ 0000026d74fc25c0] frame B:903 Avg QP:27.87 size: 1663

[libx264 @ 0000026d74fc25c0] consecutive B-frames: 1.4% 17.7% 13.0% 67.9%

[libx264 @ 0000026d74fc25c0] mb I I16..4: 5.2% 80.1% 14.7%

[libx264 @ 0000026d74fc25c0] mb P I16..4: 1.3% 6.6% 1.4% P16..4: 42.6% 17.8% 8.1% 0.0% 0.0% skip:22.3%

[libx264 @ 0000026d74fc25c0] mb B I16..4: 0.1% 0.4% 0.1% B16..8: 31.3% 4.1% 0.9% direct: 0.9% skip:62.1% L0:45.1% L1:48.3% BI: 6.6%

[libx264 @ 0000026d74fc25c0] 8x8 transform intra:71.7% inter:74.9%

[libx264 @ 0000026d74fc25c0] coded y,uvDC,uvAC intra: 67.6% 58.7% 16.4% inter: 10.6% 8.3% 0.2%

[libx264 @ 0000026d74fc25c0] i16 v,h,dc,p: 41% 22% 5% 32%

[libx264 @ 0000026d74fc25c0] i8 v,h,dc,ddl,ddr,vr,hd,vl,hu: 32% 17% 10% 4% 6% 11% 6% 9% 6%

[libx264 @ 0000026d74fc25c0] i4 v,h,dc,ddl,ddr,vr,hd,vl,hu: 27% 28% 9% 4% 6% 10% 5% 7% 4%

[libx264 @ 0000026d74fc25c0] i8c dc,h,v,p: 51% 17% 25% 6%

[libx264 @ 0000026d74fc25c0] Weighted P-Frames: Y:0.2% UV:0.0%

[libx264 @ 0000026d74fc25c0] ref P L0: 63.1% 20.4% 12.9% 3.6% 0.0%

[libx264 @ 0000026d74fc25c0] ref B L0: 93.7% 5.5% 0.8%

[libx264 @ 0000026d74fc25c0] ref B L1: 98.9% 1.1%

[libx264 @ 0000026d74fc25c0] kb/s:954.19

[aac @ 0000026d76e992c0] Qavg: 376.109

[Screenshot: test result] Note that the firewall on the PC must be turned off.

[Screenshot: pulling the stream from the command line]
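The push address `rtmp://localhost:1935/live/home` lines up with the nginx configuration: port 1935 is the `listen` port, `live` is the `application` name, and `home` is a stream name chosen by the publisher. A minimal sketch of that split, assuming the simple `rtmp://host[:port]/app/stream` form (the `RtmpUrl` struct and `parse_rtmp_url` function are illustrative names, not part of FFmpeg or nginx):

```cpp
#include <string>

// Illustrative breakdown of an RTMP publish/play address.
struct RtmpUrl {
    std::string host;
    int port = 1935;     // used when the URL has no explicit port
    std::string app;     // must match "application <name>" in the rtmp block
    std::string stream;  // stream name chosen by the publisher
};

// Parses rtmp://host[:port]/app/stream. Returns false for anything else.
bool parse_rtmp_url(const std::string& url, RtmpUrl& out) {
    const std::string scheme = "rtmp://";
    if (url.compare(0, scheme.size(), scheme) != 0) return false;

    const std::size_t host_begin = scheme.size();
    const std::size_t app_slash = url.find('/', host_begin);
    if (app_slash == std::string::npos) return false;
    const std::size_t stream_slash = url.find('/', app_slash + 1);
    if (stream_slash == std::string::npos) return false;

    RtmpUrl parsed;
    std::string authority = url.substr(host_begin, app_slash - host_begin);
    const std::size_t colon = authority.find(':');
    if (colon != std::string::npos) {
        try {
            parsed.port = std::stoi(authority.substr(colon + 1));
        } catch (...) {
            return false;  // port is not a number
        }
        authority.erase(colon);
    }
    parsed.host = authority;
    parsed.app = url.substr(app_slash + 1, stream_slash - app_slash - 1);
    parsed.stream = url.substr(stream_slash + 1);
    if (parsed.host.empty() || parsed.app.empty() || parsed.stream.empty()) return false;

    out = parsed;
    return true;
}
```

A player has to use the same application and stream name as the publisher, so a stream pushed to `/live/home` is pulled from `/live/home` as well.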

V. First verify that the environment works with a test program in Visual Studio

main.cpp

#include <iostream>

#include <opencv2/opencv.hpp>

#include "rtmpframe.h"

using namespace cv;

using namespace std;

int main()

{

RTMPFrame* rtmpItem = new RTMPFrame();

// Read from a video file or a camera

VideoCapture capture("F:\\xck.mp4");

if (capture.isOpened()) {

std::cout << "video_width = " << capture.get(cv::CAP_PROP_FRAME_WIDTH) << std::endl;

std::cout << "video_height = " << capture.get(cv::CAP_PROP_FRAME_HEIGHT) << std::endl;

std::cout << "video_fps = " << capture.get(cv::CAP_PROP_FPS) << std::endl;

std::cout << "video_total_frames = " << capture.get(cv::CAP_PROP_FRAME_COUNT) << std::endl;

}

else {

std::cout << "failed to open the video file" << std::endl;

return -1;

}

rtmpItem->rtmp_init(capture.get(cv::CAP_PROP_FRAME_WIDTH), capture.get(cv::CAP_PROP_FRAME_HEIGHT));

while (true)

{

Mat frame;

capture >> frame;

if (frame.empty())

break;

rtmpItem->rtmp_sender_frame(frame.data, frame.cols, frame.rows, frame.elemSize());

imshow("frame", frame);

waitKey(1);

}

delete rtmpItem;

return 0;

}

rtmpframe.h

#pragma once

//

// Created by PHILIPS on 1/7/2022.

//

#ifndef NCNN_ANDROID_NANODET_RTMPFRAME_H

#define NCNN_ANDROID_NANODET_RTMPFRAME_H

#include<iostream>

extern "C"

{

#include <libavformat/avformat.h>

#include <libavcodec/avcodec.h>

#include <libavutil/imgutils.h>

#include <libswscale/swscale.h>

}

class RTMPFrame {

public:

RTMPFrame();

~RTMPFrame();

public:

// 1. Stream push URL

const char* out_url = "rtmp://192.168.10.151:1935/live/livestream";

private:

// 2. Pixel-format conversion context

SwsContext* sws_context;

// 3. Output frame

AVFrame* yuv;

// 4. Encoder

AVCodec* codec;

// 5. Encoder context

AVCodecContext* codec_context;

// 6. Encoder options

AVDictionary* codec_options;

// Output format (muxer) context

AVFormatContext* format_context;

// Output video stream

AVStream* vs;

// Reusable packet for the encoded data

AVPacket pack;

int fps;

int vpts;

uint8_t* in_data[AV_NUM_DATA_POINTERS] = { 0 };

int in_size[AV_NUM_DATA_POINTERS] = { 0 };

char buf[1024] = { 0 };

public:

int rtmp_sender_frame(unsigned char* frameData, int frameCols, int frameRows, int frameElemSize);

int rtmp_init(int width, int height);

};

#endif //NCNN_ANDROID_NANODET_RTMPFRAME_H
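The class takes one packed input plane (`in_data[0]` with its row size in `in_size[0]`) and converts it into a planar YUV420P frame. As a rough sketch of the sizes involved, assuming tightly packed rows (FFmpeg may pad each row for alignment, so the real `linesize` values can be larger), the layout can be computed as follows. `FrameLayout` and `layout_for` are hypothetical names used only for this illustration:

```cpp
#include <cassert>

// Minimum buffer sizes for one frame, assuming no row padding.
struct FrameLayout {
    int bgr_stride;  // bytes in one packed BGR24 row (3 bytes per pixel)
    int y_size;      // bytes in the full-resolution luma plane
    int u_size;      // bytes in the half-resolution U plane
    int v_size;      // bytes in the half-resolution V plane
};

FrameLayout layout_for(int width, int height) {
    assert(width > 0 && height > 0);
    // In 4:2:0 the chroma planes are subsampled by 2 in both directions;
    // odd dimensions round up.
    const int chroma_w = (width + 1) / 2;
    const int chroma_h = (height + 1) / 2;

    FrameLayout layout;
    layout.bgr_stride = width * 3;
    layout.y_size = width * height;
    layout.u_size = chroma_w * chroma_h;
    layout.v_size = layout.u_size;
    return layout;
}
```

For a 1920x1080 frame this gives a 5760-byte input row and about 3 MB of YUV data per frame, half the size of the packed BGR input.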

rtmpframe.cpp

//

// Created by sxj731533730 on 1/7/2022.

//

#include "rtmpframe.h"

RTMPFrame::RTMPFrame() {

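// 1. The push URL is initialised in the class declaration

// 2. Pixel-format conversion context

sws_context = NULL;

// 3. Output frame

yuv = NULL;

// 4. Encoder

codec = NULL;

// 5. Encoder context

codec_context = NULL;

// 6. Encoder options

codec_options = NULL;

// Output format (muxer) context

format_context = NULL;

// Output video stream

vs = NULL;

// Frame rate and running presentation timestamp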

fps = 25;

vpts = 0;

}

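RTMPFrame::~RTMPFrame() {

// Every call below tolerates NULL, so a partially initialised object is safe to destroy

av_dict_free(&codec_options);

avcodec_free_context(&codec_context);

av_frame_free(&yuv);

// format_context is NULL if rtmp_init was never called or failed early

if (format_context != NULL) {

avio_closep(&format_context->pb);

avformat_free_context(format_context);

format_context = NULL;

}

sws_freeContext(sws_context);

}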

int RTMPFrame::rtmp_init(int width, int height) {

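// Initialise the network protocols

avformat_network_init();

// 2. Initialise the pixel-format conversion context

sws_context = sws_getCachedContext(sws_context,

width, height, AV_PIX_FMT_BGR24, // source format

width, height, AV_PIX_FMT_YUV420P, // destination format

SWS_BICUBIC, // scaling algorithm used when the size changes

0, 0, 0);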

if (NULL == sws_context) {

std::cout << "sws_getCachedContext error" << std::endl;

return -1;

}

// 3. Initialise the output frame

yuv = av_frame_alloc();

yuv->format = AV_PIX_FMT_YUV420P;

yuv->width = width;

yuv->height = height;

yuv->pts = 0;

// Allocate the YUV buffers

int ret_code = av_frame_get_buffer(yuv, 32);

if (0 != ret_code) {

std::cout << "yuv init error" << std::endl;

return -1;

}

// 4. Initialise the encoder context

// 4.1 Find the encoder

const AVCodec* codec = avcodec_find_encoder(AV_CODEC_ID_H264);

if (NULL == codec) {

std::cout << "Can't find h264 encoder" << std::endl;

return -1;

}

// 4.2 Create the encoder context

codec_context = avcodec_alloc_context3(codec);

if (NULL == codec_context) {

std::cout << "avcodec_alloc_context3 failed" << std::endl;

return -1;

}

// 4.3 Configure the encoder parameters

// vc->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

codec_context->codec_id = codec->id;

codec_context->thread_count = 8;

// Target bitrate of the compressed video: 50 KiB/s (409600 bit/s)

codec_context->bit_rate = 50 * 1024 * 8;

codec_context->width = width;

codec_context->height = height;

codec_context->time_base = { 1, fps };

codec_context->framerate = { fps, 1 };

// GOP size: one key frame every 50 frames

codec_context->gop_size = 50;

codec_context->max_b_frames = 0;

codec_context->pix_fmt = AV_PIX_FMT_YUV420P;

codec_context->qmin = 10;

codec_context->qmax = 51;

// Available profiles: baseline | high | high10 | high422 | high444 | main

av_dict_set(&codec_options, "profile", "baseline", 0);

av_dict_set(&codec_options, "preset", "superfast", 0);

av_dict_set(&codec_options, "tune", "zerolatency", 0);

// 4.4 Open the encoder context

ret_code = avcodec_open2(codec_context, codec, &codec_options);

if (0 != ret_code) {

av_strerror(ret_code, buf, sizeof(buf));

std::cout << "avcodec_open2 format_context failed" << std::endl;

return -1;

}

// 5. Configure the output muxer and the video stream

// 5.1 Create the output muxer context

// FLV muxer for RTMP

ret_code = avformat_alloc_output_context2(&format_context, 0, "flv", out_url);

if (0 != ret_code) {

av_strerror(ret_code, buf, sizeof(buf));

std::cout << " avformat_alloc_output_context2 format_context error!" << std::endl;

return -1;

}

// 5.2 Add the video stream

vs = avformat_new_stream(format_context, NULL);

if (NULL == vs) {

std::cout << "avformat_new_stream avformat_new_stream failed" << std::endl;

return -1;

}

vs->codecpar->codec_tag = 0;

// Copy the parameters from the encoder context

avcodec_parameters_from_context(vs->codecpar, codec_context);

av_dump_format(format_context, 0, out_url, 1);

// Open the RTMP network output I/O

ret_code = avio_open(&format_context->pb, out_url, AVIO_FLAG_WRITE);

if (0 != ret_code) {

av_strerror(ret_code, buf, sizeof(buf));

std::cout << "avio_open format_context failed" << std::endl;

return -1;

}

// Write the container header

ret_code = avformat_write_header(format_context, NULL);

if (0 != ret_code) {

av_strerror(ret_code, buf, sizeof(buf));

std::cout << "avformat_write_header format_context fail" << std::endl;

return -1;

}

memset(&pack, 0, sizeof(pack));

std::cout << "init rtmp successfully" << std::endl;

return 0;

}

int RTMPFrame::rtmp_sender_frame(unsigned char* frameData, int frameCols, int frameRows, int frameElemSize) {

if (frameData == NULL)

{

std::cout << "currentFrame is null" << std::endl;

return -1;

}

// Packed input (the conversion context was created for BGR24) to YUV420P

in_data[0] = frameData;

// Number of bytes in one row (width) of the input

in_size[0] = frameCols * frameElemSize;

int ret_code = sws_scale(sws_context, in_data, in_size, 0, frameRows,

yuv->data, yuv->linesize);

if (ret_code <= 0) {

av_strerror(ret_code, buf, sizeof(buf));

std::cout << "sws_context is fail " << std::endl;

return -1;

}

// H.264 encoding

yuv->pts = vpts;

vpts++;

if (vpts < 0)

{

vpts = 0;

}

ret_code = avcodec_send_frame(codec_context, yuv);

if (0 != ret_code) {

av_strerror(ret_code, buf, sizeof(buf));

std::cout << "codec_context is fail " << std::endl;

return -1;

}

ret_code = avcodec_receive_packet(codec_context, &pack);

if (ret_code == AVERROR(EAGAIN)) {

// The encoder needs more input before it can output a packet; this is not an error

return 0;

}

if (0 != ret_code) {

av_strerror(ret_code, buf, sizeof(buf));

std::cout << "avcodec_receive_packet is fail: " << buf << std::endl;

return -1;

}

// Push the packet to the server

pack.pts = av_rescale_q(pack.pts, codec_context->time_base, vs->time_base);

pack.dts = av_rescale_q(pack.dts, codec_context->time_base, vs->time_base);

pack.duration = av_rescale_q(pack.duration,

codec_context->time_base,

vs->time_base);

ret_code = av_interleaved_write_frame(format_context, &pack);

if (0 != ret_code)

{

av_strerror(ret_code, buf, sizeof(buf));

std::cout << "av_interleaved_write_frame format_context is fail " << std::endl;

return -1;

}

av_packet_unref(&pack);

std::cout << "rtmp frame successfully " << std::endl;

return 0;

}
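The three `av_rescale_q` calls in `rtmp_sender_frame` convert timestamps from the encoder time base (1/fps, so 1/25 here) to the stream time base. For FLV that is 1/1000, which matches the "1k tbn" shown in the ffmpeg log earlier. The arithmetic can be sketched with a simplified stand-in, which is not FFmpeg's implementation: it assumes non-negative timestamps and has no overflow protection. `Rational` and `rescale_ts` are illustrative names:

```cpp
#include <cassert>
#include <cstdint>

// A time base expressed as num/den seconds per tick.
struct Rational {
    int num;
    int den;
};

// Converts ts from time base `from` to time base `to`, rounding to the
// nearest tick. Simplified stand-in for av_rescale_q: non-negative ts only,
// no overflow handling.
int64_t rescale_ts(int64_t ts, Rational from, Rational to) {
    assert(ts >= 0 && from.num > 0 && from.den > 0 && to.num > 0 && to.den > 0);
    const int64_t numerator = static_cast<int64_t>(from.num) * to.den;
    const int64_t denominator = static_cast<int64_t>(from.den) * to.num;
    return (ts * numerator + denominator / 2) / denominator;
}
```

With a 1/25 encoder time base, frame n gets the timestamp n * 40 in a 1/1000 stream, so consecutive frames are 40 ms apart regardless of how quickly the loop actually delivers them.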

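[Screenshot: test result]

VI. Next, integrate the stream-pushing code into the Android project; it can be pasted in with small changes. For reference, see this post of mine: "8. Video capture and stream pushing to a server on Linux/Android (FFMPEG4.4 & Android7.0)" (sxj731533730, CSDN blog).

Add the network permission to AndroidManifest.xml:

<uses-permission android:name="android.permission.INTERNET"> </uses-permission>

Then add the code to the Android project.

Here is the interface file nanodetncnn.cpp (nihui's file with the streaming calls added):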

// Tencent is pleased to support the open source community by making ncnn available.

//

// Copyright (C) 2021 THL A29 Limited, a Tencent company. All rights reserved.

//

// Licensed under the BSD 3-Clause License (the "License"); you may not use this file except

// in compliance with the License. You may obtain a copy of the License at

//

// https://opensource.org/licenses/BSD-3-Clause

//

// Unless required by applicable law or agreed to in writing, software distributed

// under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR

// CONDITIONS OF ANY KIND, either express or implied. See the License for the

// specific language governing permissions and limitations under the License.

#include <android/asset_manager_jni.h>

#include <android/native_window_jni.h>

#include <android/native_window.h>

#include <android/log.h>

#include <jni.h>

#include <string>

#include <vector>

#include <platform.h>

#include <benchmark.h>

#include "nanodet.h"

#include "ndkcamera.h"

#include "rtmpframe.h"

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#if __ARM_NEON

#include <arm_neon.h>

#endif // __ARM_NEON

static int draw_unsupported(cv::Mat& rgb)

{

const char text[] = "unsupported";

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 1.0, 1, &baseLine);

int y = (rgb.rows - label_size.height) / 2;

int x = (rgb.cols - label_size.width) / 2;

cv::rectangle(rgb, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),

cv::Scalar(255, 255, 255), -1);

cv::putText(rgb, text, cv::Point(x, y + label_size.height),

cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 0));

return 0;

}

static int draw_fps(cv::Mat& rgb)

{

// resolve moving average

float avg_fps = 0.f;

{

static double t0 = 0.f;

static float fps_history[10] = {0.f};

double t1 = ncnn::get_current_time();

if (t0 == 0.f)

{

t0 = t1;

return 0;

}

float fps = 1000.f / (t1 - t0);

t0 = t1;

for (int i = 9; i >= 1; i--)

{

fps_history[i] = fps_history[i - 1];

}

fps_history[0] = fps;

if (fps_history[9] == 0.f)

{

return 0;

}

for (int i = 0; i < 10; i++)

{

avg_fps += fps_history[i];

}

avg_fps /= 10.f;

}

char text[32];

sprintf(text, "FPS=%.2f", avg_fps);

int baseLine = 0;

cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

int y = 0;

int x = rgb.cols - label_size.width;

cv::rectangle(rgb, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),

cv::Scalar(255, 255, 255), -1);

cv::putText(rgb, text, cv::Point(x, y + label_size.height),

cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));

return 0;

}

static NanoDet* g_nanodet = 0;

static RTMPFrame *g_rtmpframe=0;

static ncnn::Mutex lock;

class MyNdkCamera : public NdkCameraWindow

{

public:

virtual void on_image_render(cv::Mat& rgb) const;

};

void MyNdkCamera::on_image_render(cv::Mat& rgb) const

{

// nanodet

{

ncnn::MutexLockGuard g(lock);

if (g_nanodet)

{

std::vector<Object> objects;

g_nanodet->detect(rgb, objects);

g_nanodet->draw(rgb, objects);

if(g_rtmpframe){

cv::Mat rgbFrame;

cv::resize(rgb,rgbFrame,cv::Size(1920,1080));

g_rtmpframe->rtmp_sender_frame(rgbFrame.data,rgbFrame.cols,rgbFrame.rows,rgbFrame.elemSize());

}

}

else

{

draw_unsupported(rgb);

}

}

draw_fps(rgb);

}

static MyNdkCamera* g_camera = 0;

extern "C" {

JNIEXPORT jint JNI_OnLoad(JavaVM* vm, void* reserved)

{

__android_log_print(ANDROID_LOG_DEBUG, "ncnn", "JNI_OnLoad");

g_camera = new MyNdkCamera;

return JNI_VERSION_1_4;

}

JNIEXPORT void JNI_OnUnload(JavaVM* vm, void* reserved)

{

__android_log_print(ANDROID_LOG_DEBUG, "ncnn", "JNI_OnUnload");

{

ncnn::MutexLockGuard g(lock);

delete g_nanodet;

g_nanodet = 0;

delete g_rtmpframe;

g_rtmpframe=0;

}

delete g_camera;

g_camera = 0;

}

// public native boolean loadModel(AssetManager mgr, int modelid, int cpugpu);

JNIEXPORT jboolean JNICALL Java_com_tencent_nanodetncnn_NanoDetNcnn_loadModel(JNIEnv* env, jobject thiz, jobject assetManager, jint modelid, jint cpugpu)

{

if (modelid < 0 || modelid > 6 || cpugpu < 0 || cpugpu > 1)

{

return JNI_FALSE;

}

AAssetManager* mgr = AAssetManager_fromJava(env, assetManager);

__android_log_print(ANDROID_LOG_DEBUG, "ncnn", "loadModel %p", mgr);

const char* modeltypes[] =

{

"m",

"m-416",

"g",

"ELite0_320",

"ELite1_416",

"ELite2_512",

"RepVGG-A0_416"

};

const int target_sizes[] =

{

320,

416,

416,

320,

416,

512,

416

};

const float mean_vals[][3] =

{

{103.53f, 116.28f, 123.675f},

{103.53f, 116.28f, 123.675f},

{103.53f, 116.28f, 123.675f},

{127.f, 127.f, 127.f},

{127.f, 127.f, 127.f},

{127.f, 127.f, 127.f},

{103.53f, 116.28f, 123.675f}

};

const float norm_vals[][3] =

{

{1.f / 57.375f, 1.f / 57.12f, 1.f / 58.395f},

{1.f / 57.375f, 1.f / 57.12f, 1.f / 58.395f},

{1.f / 57.375f, 1.f / 57.12f, 1.f / 58.395f},

{1.f / 128.f, 1.f / 128.f, 1.f / 128.f},

{1.f / 128.f, 1.f / 128.f, 1.f / 128.f},

{1.f / 128.f, 1.f / 128.f, 1.f / 128.f},

{1.f / 57.375f, 1.f / 57.12f, 1.f / 58.395f}

};

const char* modeltype = modeltypes[(int)modelid];

int target_size = target_sizes[(int)modelid];

bool use_gpu = (int)cpugpu == 1;

// reload

{

ncnn::MutexLockGuard g(lock);

if (use_gpu && ncnn::get_gpu_count() == 0)

{

// no gpu

delete g_nanodet;

g_nanodet = 0;

delete g_rtmpframe;

g_rtmpframe=0;

}

else

{

if (!g_nanodet)

g_nanodet = new NanoDet;

g_nanodet->load(mgr, modeltype, target_size, mean_vals[(int)modelid], norm_vals[(int)modelid], use_gpu);

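if (!g_rtmpframe)

{

// Create and initialise the sender once; calling rtmp_init again on every model reload would reallocate the contexts and reconnect

g_rtmpframe = new RTMPFrame;

g_rtmpframe->rtmp_init( 1920,1080);

}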

}

}

return JNI_TRUE;

}

// public native boolean openCamera(int facing);

JNIEXPORT jboolean JNICALL Java_com_tencent_nanodetncnn_NanoDetNcnn_openCamera(JNIEnv* env, jobject thiz, jint facing)

{

if (facing < 0 || facing > 1)

return JNI_FALSE;

__android_log_print(ANDROID_LOG_DEBUG, "ncnn", "openCamera %d", facing);

g_camera->open((int)facing);

return JNI_TRUE;

}

// public native boolean closeCamera();

JNIEXPORT jboolean JNICALL Java_com_tencent_nanodetncnn_NanoDetNcnn_closeCamera(JNIEnv* env, jobject thiz)

{

__android_log_print(ANDROID_LOG_DEBUG, "ncnn", "closeCamera");

g_camera->close();

return JNI_TRUE;

}

// public native boolean setOutputWindow(Surface surface);

JNIEXPORT jboolean JNICALL Java_com_tencent_nanodetncnn_NanoDetNcnn_setOutputWindow(JNIEnv* env, jobject thiz, jobject surface)

{

ANativeWindow* win = ANativeWindow_fromSurface(env, surface);

__android_log_print(ANDROID_LOG_DEBUG, "ncnn", "setOutputWindow %p", win);

g_camera->set_window(win);

return JNI_TRUE;

}

}

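Add the other two files (rtmpframe.h and rtmpframe.cpp) to the project and reference them in CMakeLists.txt.

[Screenshot: test result on the phone]

On the PC, with nginx running, the pulled stream plays in real time. [Screenshot: test result on Windows 10] The pulled picture is somewhat distorted: the phone displays a 480*640 image, and I stretched it to 1920*1080.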
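The distortion comes from resizing a portrait 480x640 frame straight to a landscape 1920x1080 target, which changes the aspect ratio. One possible way around it, sketched here as an assumption rather than as what the project does, is to compute the largest size with the source aspect ratio that still fits inside the target, and resize to that instead. `Size` and `fit_within` are hypothetical names:

```cpp
#include <cassert>
#include <cstdint>

struct Size {
    int width;
    int height;
};

// Largest size with the aspect ratio of `src` that fits inside `dst`.
// Both dimensions are rounded down to even values, since YUV420P
// subsamples chroma by 2 in each direction.
Size fit_within(Size src, Size dst) {
    assert(src.width > 0 && src.height > 0 && dst.width > 1 && dst.height > 1);
    Size out;
    // Compare src.width/src.height with dst.width/dst.height without floats.
    if (static_cast<int64_t>(src.width) * dst.height >=
        static_cast<int64_t>(dst.width) * src.height) {
        // Source is relatively wider: the target width is the limit.
        out.width = dst.width;
        out.height = static_cast<int>(static_cast<int64_t>(src.height) * dst.width / src.width);
    } else {
        // Source is relatively taller: the target height is the limit.
        out.height = dst.height;
        out.width = static_cast<int>(static_cast<int64_t>(src.width) * dst.height / src.height);
    }
    out.width &= ~1;
    out.height &= ~1;
    return out;
}
```

For the 480x640 camera frame and a 1920x1080 target this yields 810x1080. The resized image could then be centred on a black 1920x1080 canvas before it is passed to `rtmp_sender_frame`, or the encoder could be initialised with the fitted size directly.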

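The code is on GitHub in two parts: the third-party FFmpeg libraries I built, and the stream-pushing code.

FFmpeg 4.4 libraries: https://github.com/sxj731533730/ffmpeg4.4-mobile-20220107-android.git

Real-time detection with stream pushing: https://github.com/sxj731533730/ncnn-ffmpeg-android-nanodet.git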

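References:

GitHub - nihui/ncnn-android-nanodet

Releases · Tencent/ncnn · GitHub

Fixing problems when building the x86 CPU version of FFmpeg 4.2.2 with the Android NDK – K-Res's Blog

"8. Video capture and stream pushing to a server on Linux/Android (FFMPEG4.4 & Android7.0)" (sxj731533730, CSDN blog)

Copyright notice: this is an original article by the CSDN blogger "sxj731533730", released under the CC 4.0 BY-SA license. Please include the original source link and this notice when reposting.

Original link: https://blog.csdn.net/sxj731533730/article/details/122270676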
